Top Stories

Scientists May Soon Predict Earthquakes with 80% Accuracy Two Days in Advance

There are many ways that Earth hints an earthquake is coming. Sometimes it’s through the groundwater level. In the past, scientists have used radon to predict seismic movement. But none of these gives us an accurate picture of an earthquake’s strength. Nor do they tell us how long it will last or where it will strike. If only there were a method to predict earthquakes with 80% accuracy, preparedness and response would be far more efficient.

It used to seem impossible; after all, earthquakes are a complex puzzle that has confounded scientists for decades. That is, until now. Israeli scientists have recently discovered a way to predict earthquakes with 80% accuracy 48 hours before they strike. A joint team from Ariel University and the Center for Research and Development Eastern Branch made the discovery.

Their peer-reviewed study, which details how the system works, was published in the journal Remote Sensing in May. Given the toll earthquakes take on human communities, this discovery may give people the advantage they need.

How do researchers predict earthquakes with 80% accuracy?

Earthquakes are notoriously hard to predict. It’s difficult to read the Earth’s movements, and most scientists struggle to interpret warning signals in time to send out alerts.

Granted, scientists currently use various methods to forecast quakes. But these don’t tell us how long a tremor will last, how strong it will be, or where it will be felt. In short, though predicting earthquakes is essential, we don’t have a great way of doing it yet.

So, a system that predicts earthquakes with 80% accuracy 48 hours before they strike could help us greatly.

Israeli researchers have turned to an unlikely place to trace tremors and quakes: the sky. More specifically, the ionosphere, the ionized layer of the upper atmosphere that borders the vacuum of space.

The scientists used GPS-based maps of the ionosphere’s total electron content (TEC) and analyzed them with a machine learning technique called a support vector machine (SVM). Combining the two, the researchers tracked the electron density of the ionosphere and looked for changes that precede major quakes.
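To make the idea of a support vector machine more concrete, here is a minimal Python sketch of a linear SVM fitted by per-sample subgradient descent on the hinge loss. It is not the study’s code: the two input features (imagined here as summary statistics of TEC anomalies), the training points, and the names `train_linear_svm` and `predict` are all hypothetical, and real research would typically rely on an established library and carefully engineered features.

```python
from typing import List, Tuple

Vector = List[float]


def train_linear_svm(
    samples: List[Vector],
    labels: List[int],
    lr: float = 0.01,
    lam: float = 0.01,
    epochs: int = 200,
) -> Tuple[Vector, float]:
    """Soft-margin linear SVM fitted by per-sample subgradient descent.

    Minimises lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))).
    Labels are +1 ("a quake followed") or -1 ("no quake"); this labelling
    is a hypothetical stand-in for the study's actual setup.
    """
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Inside the margin or misclassified: the hinge term is active.
                w = [wi - lr * (lam * wi - y * xi) for wi, xi in zip(w, x)]
                b += lr * y
            else:
                # Only the L2 regularisation term contributes to the gradient.
                w = [wi - lr * lam * wi for wi in w]
    return w, b


def predict(w: Vector, b: float, x: Vector) -> int:
    """Return +1 if the sample falls on the 'quake' side of the hyperplane."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1


if __name__ == "__main__":
    # Synthetic, made-up features: [hypothetical TEC anomaly A, anomaly B].
    X = [[2.0, 2.5], [3.0, 2.0], [2.5, 3.5], [-2.0, -1.5], [-3.0, -2.5], [-1.5, -3.0]]
    y = [1, 1, 1, -1, -1, -1]
    w, b = train_linear_svm(X, y)
    print([predict(w, b, x) for x in X])
```

The SVM looks for the boundary that separates the two classes with the widest possible margin, which is why points already well clear of it stop pulling on the weights.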

Applying this method to data from the last 20 years, the scientists were able to predict past major earthquakes. They defined “major” as an event of magnitude 6 or higher on the moment magnitude scale (Mw 6+). In these tests, the system predicted earthquakes with 80% accuracy.

It also predicted, with 87.5% accuracy, where earthquakes would not strike.
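The article does not say exactly how the 80% and 87.5% figures were calculated. One common way to report results like these, assumed here, is as two separate rates: the share of real earthquakes the system flagged (sensitivity) and the share of quake-free cases it correctly called quiet (specificity). The short Python sketch below computes both from a list of yes/no predictions; the function name `detection_rates` and the toy data are hypothetical and are not the study’s results.

```python
from typing import Sequence, Tuple


def detection_rates(predicted: Sequence[bool], actual: Sequence[bool]) -> Tuple[float, float]:
    """Return (sensitivity, specificity) for binary 'quake / no quake' calls."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)          # quake predicted, quake happened
    fn = sum(1 for p, a in pairs if not p and a)      # quake missed
    tn = sum(1 for p, a in pairs if not p and not a)  # quiet predicted, no quake
    fp = sum(1 for p, a in pairs if p and not a)      # false alarm
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return sensitivity, specificity


if __name__ == "__main__":
    # Toy example only: 5 time windows with a quake, 4 without.
    actual = [True, True, True, True, True, False, False, False, False]
    predicted = [True, True, True, True, False, False, False, True, False]
    print(detection_rates(predicted, actual))  # → (0.8, 0.75)
```

Reporting the two rates separately matters because a system can score well on one (for example, rarely missing a quake) while doing poorly on the other (raising many false alarms).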

The study was conducted by Dr. Yuval Reuvani, Dr. Li-Ad Gotlieb, Dr. Nimrod Imbar, and graduate student Said Asali. It was funded by Israel’s Ministry of Energy and the Israel Science Foundation.

How can this be applied?

Earthquakes bring a slew of other risks, so it’s essential that this discovery can be applied in real-life situations.

With the new technology, countries around the Pacific Ring of Fire may have a better chance of bracing for quakes. Major earthquakes can cause massive destruction to landscapes and communities. This is especially true for people living in mountains and other sloped areas, where shaking can set off landslides. Things get even more dangerous in cities, where people are packed tightly into high-rise buildings.

Worse, undersea quakes and their aftershocks can trigger tsunamis, endangering coastal provinces. Without reliable technology to predict quakes, a major natural disaster could wipe out entire cities, and it often takes years to recover.

Furthermore, some studies suggest that climate change could increase the likelihood of earthquakes. For example, rising sea levels driven by higher temperatures could add stress to faults and help trigger them. Now that warming has tipped nature’s balance, it is hard to tell where the triggers of future earthquakes may come from.

So, a system that predicts earthquakes with 80% accuracy 48 hours in advance would be a major advantage, especially for regions waiting for “the big one.” Scientists may soon detect earthquakes two days before they strike.

And for other news stories, read more here at Owner’s Mag!

FN Meka, the world’s first AI rapper, gets booted out by record label

Published August 29, 2025, by Carmen Day

Unfortunately, we’ve reached a point where companies use AI to create artist identities. For example, at the 2022 VMAs, Eminem and Snoop Dogg performed with their avatars in the metaverse. AI rappers are part of the same trend. The first AI-generated rapper was FN Meka, who burst onto the scene in April 2019.

Soon enough, he gained a huge following on TikTok for his Hypebeast aesthetic and larger-than-life personality. In 2021, his TikTok account ballooned to 10 million followers. His popularity prompted Capitol Records to sign him on August 14, 2022. But internet users soon began pulling up records of his questionable online behavior. Ten days later, his label booted him out.

Here’s how it happened.

Apparently, AI rappers exist.

FN Meka’s concept isn’t entirely original. When it comes to virtual music acts, you’d probably think of the British virtual band Gorillaz first.

Brandon Le created the AI rapper avatar to sell non-fungible tokens (NFTs). Music executive Anthony Martini then took the avatar to new heights, signing the rapper to Factory New, a record label he created for virtual artists.

His first single, “Florida Water,” features Gunna and Cody “Clix” Conrod, a Fortnite player. The single was released on the day FN Meka signed the deal.

FN Meka was the first artist to sign with Factory New.

The downfall

A few days after he signed the record deal, Industry Blackout, an online activist group, called out FN Meka over his questionable actions.

For one, the AI rapper had used the N-word in several of his songs, including his first single. He had also made light of police brutality, posting a picture of himself being beaten by police.

FN Meka was also criticized for his appearance and aesthetic, which critics said stereotyped Black people. Rumors also began circulating that no Black people had been involved in his creation in the first place.

Other news outlets also criticized the AI rapper for collaborating with Gunna, who was then in jail on racketeering charges.

The record company has since dropped him. In a statement, the label offered its “deepest apologies to the Black community” and said it was cutting ties with FN Meka “effective immediately.”

More and more problems

It doesn’t end there.

Kyle the Hooligan, a rapper, has come forward as the original voice behind FN Meka and has shared new details. He alleges that the company did not pay him for the first three songs he made for the AI rapper. He also claims the creators ghosted him around 2021, just as FN Meka was starting to gain traction.

This came as a surprise, since Factory New had claimed that the AI writes the songs and humans only perform them.

Kyle doesn’t know who currently voices FN Meka, and he hasn’t looked into it. What is clear, though, is that the character is modeled on trendy rappers like Ice Narco, Lil Pump, and 6ix9ine.

On August 28, Kyle the Hooligan announced that he would file a lawsuit against Brandon Le and Factory New.

There’s a certain irony in FN Meka: the AI rapper, voiced by a Black artist, is the product of non-Black creators. Some activists and critics have even called the AI rapper a new form of blackface, arguing that it lets anyone adopt Blackness without being Black. Most of FN Meka’s music and videos have since been deleted from TikTok. Martini has also walked away from Factory New and FN Meka, leaving the rapper’s fate up in the air.

Meta Sphere AI: New AI Knowledge Tool Based on Open Web Content

Published August 15, 2025

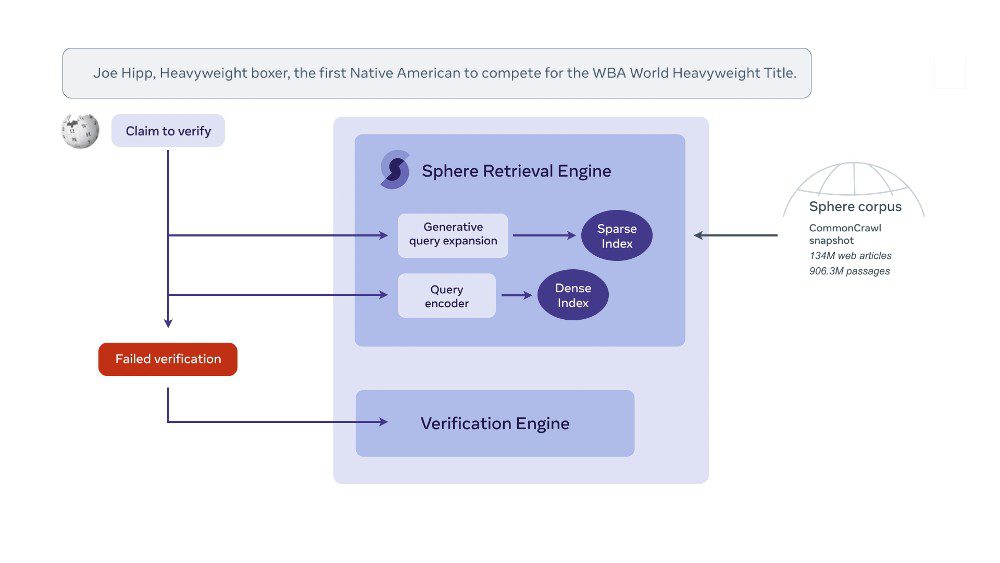

Facebook says it wants to help fight the misinformation that has run rampant across the internet in recent years, an issue it may have helped create in the first place. Facebook’s parent company, Meta, announced a new AI-powered tool called Sphere on July 11. Meta Sphere AI is intended to help detect and address misinformation, or “fake news,” on the internet. The company claims it is the first AI model that can automatically scan numerous citations at once.

How It Works

The idea behind applying Meta Sphere AI to Wikipedia is simple. The online encyclopedia contains 6.5 million entries, and on average almost 17,000 new articles are added each month. Because Wikipedia is a wiki, adding and editing content is crowdsourced. While editors review the content, checking it is an exhausting task that grows every day.

Meanwhile, the Wikimedia Foundation, which oversees Wikipedia, has been weighing new ways of leveraging all that data.

Meta’s announcement of its partnership with Wikipedia does not mention Wikimedia Enterprise, the foundation’s paid service for commercial users of Wikipedia content. Still, tools that help verify Wikipedia’s content could matter to prospective Enterprise customers deciding whether to pay for the service.

Meta has confirmed that there is no financial arrangement in this deal. However, the company notes that training the Sphere model created “a new data set (WAFER) of 4 million Wikipedia citations.”

What Can Happen in The Future

Based on the comments Meta gave TechCrunch, here are the things that might happen soon.

- Meta says Sphere’s “white box” knowledge base contains significantly more data than traditional “black box” knowledge sources. The 134 million documents Meta used to develop Sphere were split into 906 million passages of 100 tokens each.

- Meta argues that, because Sphere is open source, it offers a more solid foundation for training AI models and other work than any proprietary knowledge base. The company concedes that the foundations of that knowledge are potentially shaky, especially in these early days. What if a true claim is not reported as widely as fake news? That’s where Meta wants to focus its future efforts on Sphere. Meta intends to develop models that evaluate the quality of retrieved documents, detect potential errors, and prioritize more credible sources.

- Meta Sphere AI raises interesting questions about what Sphere’s hierarchy of truth will be based on, compared with other knowledge bases. Because Sphere is open source, users may be able to tweak those algorithms to better suit their own needs.

- Meanwhile, Meta has confirmed that it is not using Sphere, or any version of it, on platforms like Facebook, Instagram, and Messenger, which have long grappled with misinformation and toxicity from bad actors. Meta has separate tools to manage and moderate content on those platforms.

- The tool appears to be designed for massive scale. Wikipedia has grown beyond what any team of humans, however large, could check for accuracy. The critical point is that Sphere can automatically scan hundreds of thousands of citations simultaneously to spot when a citation has little support across the wider web.
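The passage counts in the first point describe a common preprocessing step for retrieval systems: long documents are cut into short, fixed-length passages that can be indexed and searched individually. Below is a minimal Python sketch of that step, assuming non-overlapping passages and simple whitespace tokenization; Meta’s actual tokenizer and splitting rules are not described here, and `split_into_passages` is a hypothetical name.

```python
from typing import List


def split_into_passages(text: str, passage_len: int = 100) -> List[str]:
    """Split a document into consecutive, non-overlapping passages of at most
    `passage_len` tokens. Whitespace tokenization is a simplifying assumption;
    production systems usually count subword tokens instead."""
    tokens = text.split()
    return [
        " ".join(tokens[start:start + passage_len])
        for start in range(0, len(tokens), passage_len)
    ]


if __name__ == "__main__":
    doc = " ".join(f"word{i}" for i in range(250))  # a 250-token toy document
    passages = split_into_passages(doc)
    print([len(p.split()) for p in passages])  # → [100, 100, 50]
```

Under the same non-overlapping assumption, 906 million passages from 134 million documents works out to roughly 6.8 passages per document on average, which implies typical documents of several hundred tokens.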

While Meta Sphere AI is still in development, it seems that for now, editors may be selecting the content that needs verifying. Eventually, Meta’s goal is to build a platform that helps Wikipedia editors systematically spot citation issues and quickly fix them or correct the content of the corresponding article at scale.

Ever felt dizzy or nauseous after using your laptop or smartphone? These could be signs of cybersickness. But what is cybersickness? Angelica Jasper, a Ph.D. student in Human-Computer Interaction at Iowa State University, explains its symptoms and how to cope with it.

What is Cybersickness?

Cybersickness is a cluster of symptoms, akin to motion sickness, that occur in the absence of physical motion. These symptoms fall into three categories: nausea, oculomotor issues, and general disorientation. Oculomotor symptoms, including eye strain, fatigue, and headaches, involve stressing the nerve that controls eye movement. Disorientation can manifest as dizziness and vertigo. Some symptoms, such as difficulty concentrating and blurred vision, overlap categories. These issues can persist for several hours and may affect sleep quality.

People can experience these symptoms when using everyday devices like computers, phones, and TVs. In 2013, Apple introduced a parallax effect on iPhone lock screens that made the background image seem to float when users moved their phones around. But many people found it extremely uncomfortable because it triggered cybersickness symptoms.

Researchers hold different views about why people experience cybersickness. For instance, sensory conflict theory holds that it is caused by a mismatch between the information sensed by the body systems that regulate vision and balance. Many everyday devices can create this conflict between visual perception and physical experience.

Cybersickness in Virtual and Augmented Reality

Cybersickness symptoms are perceived to become more intense with virtual reality (VR) and augmented reality (AR).

VR is widely available through popular gaming platforms like Facebook’s Oculus devices and Sony PlayStation VR. VR can trigger severe nausea that increases with the duration of use, which can make some applications and games unusable for many people.

AR, on the other hand, overlays digital content on the real world. It includes head-mounted devices that let users see what’s in front of them, as well as games like Pokémon Go that run on your phone or tablet. Prolonged use of AR devices can result in more severe oculomotor fatigue.

As AR and VR devices become more popular, cybersickness symptoms may become more common. Research and Markets estimates that the market for these technologies may grow by over 60 percent and reach USD 905.71 billion by 2027.

Cybersickness Symptoms Can Be Dangerous

Cybersickness symptoms may seem mild at first, but they can linger and affect your ability to function well, which could be dangerous. Symptoms such as severe headache, eye strain, or dizziness could impair your coordination and attention. If these symptoms persist while you are driving, they could lead to a car accident.

How to Deal With Cybersickness

If you are experiencing cybersickness symptoms, there are a few ways to ease the discomfort.

- Use blue light glasses to block out some of the blue light waves on your device screen.

- Zoom in on your screen or use larger font sizes to lessen eye strain and make daily work more sustainable and productive.

- Adjust your device’s display settings so your eyes are as comfortable as possible.

- Use devices in open spaces to reduce the risk of getting injured if you get dizzy and lose your balance.

- Take a short break if you start to feel any discomfort.

Proper Use of New Technology

The work-from-home trend has grown as a result of the COVID-19 pandemic. For many people, commuting to an office was replaced by staying at home and enduring endless Zoom meetings. The convenience is undeniable, but it has also brought growing awareness of how hard it can be to look at a screen for more than 40 hours a week.

However, don’t let cybersickness affect your motivation. As researchers continue to find ways to address cybersickness across all devices, people may be able to enjoy advancements in innovative technologies in the future without feeling uncomfortable.
